Model 5

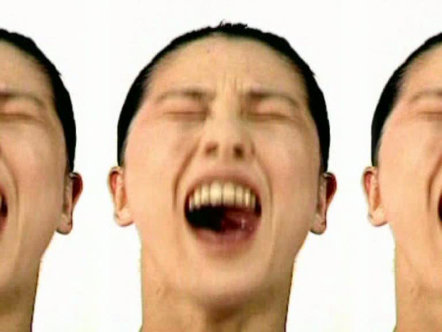

Sample session performed by Akemi Takeya

© Granular Synthesis, courtesy the artists

In the live performances of the audio/video installation Model 5 (1994–1996), the artist duo Granular Synthesis lets digitally processed image and sound elements from four video output channels (four screens) and eight audio outputs interact, producing a multisensory perceptual experience. The duo’s name says it all: here, granular synthesis means that a videographic recording is separated into units of information that are subsequently sampled and resynthesized.

In Model 5, previously recorded image and sound material of the Japanese performer Akemi Takeya is first separated, in an analytical process, into its smallest processable elements and then, in a reconstruction process, reassembled at a different frequency. The image and sound fragments produced by this recombination deviate clearly, both audibly and visibly, from the continuity of the depiction in the source material. The synchronicity and stability of image and sound, which are present in the recorded video and necessary for the depiction, are dissolved. Granular synthesis produces new connections: the visual sequence still interacts with the auditory one and can be controlled simultaneously or synchronously with it, but the electronic course of image and sound is no longer synchronized in the conventional way. Image and sound are separated, blurred, and perceived as erratic flickers. Furthermore, the newly produced frequencies are modulated live, so that the performance underscores the direct correspondence between processual image and sound control.
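The analysis and reconstruction steps described above can be illustrated with a minimal sketch. It is not the artists' actual system, whose internals are not documented here; the function names `grain_schedule` and `resynthesize`, the grain length, hop, and repeat count, and the ratio of four audio samples per video frame are all assumptions chosen for illustration. The sketch cuts a recording into short grains, replays each grain several times, and applies the same schedule to image and sound, so that both lose the continuity of the source while remaining locked to each other.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def grain_schedule(n_frames: int, grain_len: int, hop: int,
                   repeats: int) -> List[Tuple[int, int]]:
    """Analysis step (hypothetical): list (start, end) frame ranges in playback order.

    Grains of `grain_len` frames are taken every `hop` frames, and each
    grain is scheduled `repeats` times in a row.
    """
    if min(grain_len, hop, repeats) < 1:
        raise ValueError("grain_len, hop and repeats must be positive")
    schedule: List[Tuple[int, int]] = []
    for start in range(0, n_frames - grain_len + 1, hop):
        schedule.extend([(start, start + grain_len)] * repeats)
    return schedule


def resynthesize(source: Sequence[T], schedule: List[Tuple[int, int]],
                 samples_per_frame: int = 1) -> List[T]:
    """Reconstruction step (hypothetical): concatenate the scheduled grains."""
    out: List[T] = []
    for start, end in schedule:
        out.extend(source[start * samples_per_frame:end * samples_per_frame])
    return out


# Stand-in material: integers label the video frames, and every audio
# sample carries the label of the frame it belongs to (assumed 4 per frame).
video = list(range(12))
audio = [frame for frame in range(12) for _ in range(4)]

schedule = grain_schedule(len(video), grain_len=2, hop=3, repeats=2)
video_out = resynthesize(video, schedule)
audio_out = resynthesize(audio, schedule, samples_per_frame=4)

print(video_out)
```

Because one schedule drives both streams, the stuttering image and the stuttering sound stay aligned with each other even though neither follows the original timeline. Changing `hop` or `repeats` during playback would correspond, in this simplified picture, to modulating the result live.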

In Model 5, the audience perceives this intervention in the audiovisual material as violent and painful, because the artists use granular synthesis to dissect the voice and the portrait of Takeya. Her natural rhythm is eliminated and replaced by the mechanical rhythm of the sequence control. In effect, a mathematical operation of digital analysis is applied to a video recording of Takeya’s performance, and the collision of images and sounds reveals the production process of electronic as well as digital image and sound control. Whereas the base video material stands for continuity in the performance (which is not mandatory in the electronic medium), the digital editing level of the live presentation makes us aware of the media level, that is, of the variable organization of visual and auditory information. The audience perceives this level as disruptive because synchronicity has been removed within the visual and within the auditory, while at the same time the recombined image and sound are experienced as synchronous with each other. This is achieved by a motion control process that connects the video sampling with the eight MIDI-controlled audio output channels.

The predominant abstraction in the overall result of the video sampling resembles a flicker effect in a video sequencer, with the difference, however, that the image sources are not edited linearly. The impression of a musicalization of the visual arises from the simultaneity of performed auditory and visual variability, and is structurally related to Nam June Paik’s early interventions in the waveforms of the electronic medium.

- Original Title: Model 5

- Date: 1994–1996

- Genre: Audio/visual installation