Vocal EEG sonification

© Thomas Hermann, Gerold Baier, Ulrich Stephani and Helge Ritter

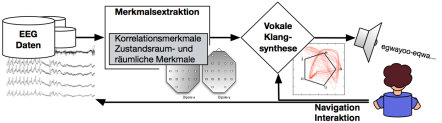

The vocal sonification of electroencephalograms (EEGs) by Thomas Hermann (neuroinformatics scientist), Gerold Baier (biophysicist), Ulrich Stephani (physician), and Helge Ritter (neuroinformatics scientist) is one of the most interesting results of long-term research aimed at improving EEG evaluation through sonification and at establishing this technology in clinical settings. Although a variety of imaging methods are now used to study the human brain, the EEG remains the preferred means of observing dynamic processes such as epileptic seizures, which it captures as electrical potentials over time at high resolution. The source data consist of a variable number of channels with partially correlated signals, so diagnosis by visual inspection alone requires considerable expertise. The continual succession of rhythmic, dynamic states that characterizes EEGs makes them especially well suited to auditory interpretation, since the human ear can detect rhythmic variations with high temporal resolution.

In vocal sonification, specific features are first extracted from the EEG and then used, by means of selective parameter mapping, to control the synthesis of vowel sounds, generating auditory forms that correspond to the features of the multivariate EEG signal. This is done by varying two formants, which are filtered from either white noise or a train of periodic impulses. The sound therefore varies continuously, chiefly along the vowels a–e–i and between voiced and unvoiced qualities. Vowels have the advantage of being familiar to the human ear, so listeners immediately form auditory gestalts that distinguish the phenomena. Because vowels are easy to imitate with the human voice, they also let listeners communicate about the signal variations they have heard. The selected examples present typical rhythmic phenomena of epileptic seizures, rendered with varied sound parameters.
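The mapping from features to vowels can be illustrated with a minimal Python sketch. It is not the authors' implementation: the sample rate, the approximate textbook formant targets for a, e, and i, the resonator bandwidth, the 0.5 voicing threshold, and all function names (`vowel_formants`, `excitation`, `resonator`, `vowel_frame`) are hypothetical choices for illustration. Two feature values, assumed to have already been extracted from the EEG and normalized to [0, 1], select a point on the a–e–i continuum and choose between a periodic impulse train (voiced) and white noise (unvoiced). The excitation is then passed through two two-pole resonators tuned to the resulting formant frequencies.

```python
import math
import random

SAMPLE_RATE = 16000  # Hz; assumed, not taken from the original work

# Approximate (F1, F2) targets in Hz for adult vowels; illustrative assumption.
VOWEL_FORMANTS = {"a": (730.0, 1090.0), "e": (530.0, 1840.0), "i": (270.0, 2290.0)}


def vowel_formants(x):
    """Hypothetical mapping of a feature x in [0, 1] onto the a-e-i continuum.

    x = 0 gives 'a', x = 0.5 gives 'e', x = 1 gives 'i'; values in between
    are linearly interpolated between neighbouring vowel targets.
    """
    x = min(max(x, 0.0), 1.0)  # clamp out-of-range features
    if x <= 0.5:
        lo, hi, t = VOWEL_FORMANTS["a"], VOWEL_FORMANTS["e"], x / 0.5
    else:
        lo, hi, t = VOWEL_FORMANTS["e"], VOWEL_FORMANTS["i"], (x - 0.5) / 0.5
    return tuple(l + t * (h - l) for l, h in zip(lo, hi))


def excitation(n, voiced, pitch_hz=120.0, seed=0):
    """Source signal: periodic impulse train if voiced, else white noise."""
    if voiced:
        period = int(round(SAMPLE_RATE / pitch_hz))
        return [1.0 if i % period == 0 else 0.0 for i in range(n)]
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def resonator(signal, freq, bandwidth):
    """Two-pole resonant filter centred at freq (Hz) with the given bandwidth (Hz)."""
    r = math.exp(-math.pi * bandwidth / SAMPLE_RATE)  # pole radius < 1, so stable
    theta = 2.0 * math.pi * freq / SAMPLE_RATE        # pole angle
    a1, a2 = 2.0 * r * math.cos(theta), -r * r
    gain = 1.0 - r  # rough level normalization; an assumption
    y1 = y2 = 0.0
    out = []
    for s in signal:
        y = gain * s + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def vowel_frame(vowel_feature, voicing_feature, n=800, bandwidth=80.0):
    """Synthesize one frame from two hypothetical, normalized EEG features."""
    f1, f2 = vowel_formants(vowel_feature)
    src = excitation(n, voiced=voicing_feature >= 0.5)  # assumed threshold
    # Two formant resonators applied in parallel and summed (one simple choice).
    return [a + b for a, b in zip(resonator(src, f1, bandwidth),
                                  resonator(src, f2, bandwidth))]
```

In use, one frame would be synthesized per analysis window, for example `[vowel_frame(v, u) for v, u in features]`, and the frames concatenated. A fuller implementation would carry filter state across frames and interpolate formants smoothly to avoid clicks at frame boundaries.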

An updated version of the principle presented here can be found at http://www.icad.org/Proceedings/2008/HermannBaier2008.pdf, accessed August 1, 2009.

- Original title: EEG-Vokalsonifikation

- Date: 2006 – (?)

- Genre: Medical procedure